You can optimize TCP connections in Artica simply by enabling a few kernel parameters, especially when Artica acts as a proxy, web server, or gateway.

Google uses TCP BBR successfully on Google Cloud Platform and has reported throughput gains of more than 14% in some cases.

TCP BBR is a TCP congestion control algorithm developed by Google.

It addresses shortcomings of traditional loss-based TCP congestion control algorithms such as Reno and CUBIC. According to Google, it can achieve orders of magnitude higher bandwidth and lower latency on some network paths.

TCP BBR is already used on Google.com, YouTube, and Google Cloud Platform, and the Internet Engineering Task Force (IETF) has been working to standardize the algorithm since July 2017. BBR stands for Bottleneck Bandwidth and RTT (round-trip propagation time).

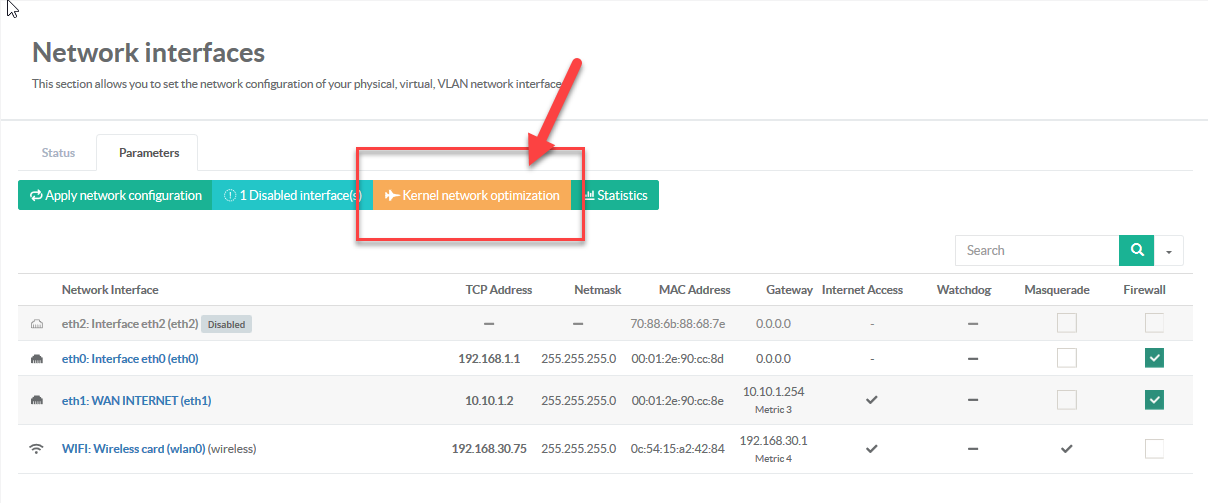

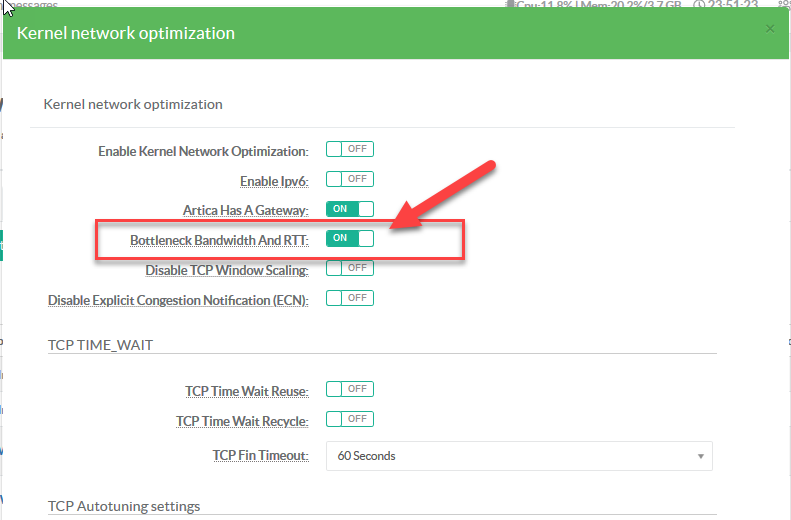

- To enable TCP BBR, go to the network section and choose “Kernel Network optimization”.

- Turn on the “Bottleneck Bandwidth And RTT” option and click the “Apply” button.
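Artica does not say which kernel settings this option changes. On a standard Linux kernel (4.9 or later), BBR is normally enabled with the sysctl `net.ipv4.tcp_congestion_control = bbr`, often paired with `net.core.default_qdisc = fq`. Assuming the Artica option applies these standard settings, the minimal Python sketch below reads them back from `/proc/sys` so you can confirm the change took effect. `read_sysctl` and `bbr_status` are hypothetical helper names, not part of Artica.

```python
from pathlib import Path

# Linux exposes sysctls as files under /proc/sys; dots in the name become slashes.
PROC_SYS = Path("/proc/sys")


def read_sysctl(name: str, root: Path = PROC_SYS) -> str:
    """Return the value of a sysctl such as 'net.ipv4.tcp_congestion_control'."""
    return (root / name.replace(".", "/")).read_text().strip()


def bbr_status(root: Path = PROC_SYS) -> dict:
    """Report whether BBR is loaded, whether it is active, and the default qdisc.

    Assumption: Artica's "Bottleneck Bandwidth And RTT" switch sets the standard
    Linux sysctls checked here; Artica's own implementation is not documented.
    """
    # Algorithms currently available; 'bbr' appears once the tcp_bbr module is loaded.
    available = read_sysctl("net.ipv4.tcp_available_congestion_control", root).split()
    # Algorithm used by default for new TCP connections.
    active = read_sysctl("net.ipv4.tcp_congestion_control", root)
    # Default queueing discipline; 'fq' is the classic companion for BBR pacing.
    qdisc = read_sysctl("net.core.default_qdisc", root)
    return {
        "bbr_available": "bbr" in available,
        "bbr_active": active == "bbr",
        "default_qdisc": qdisc,
    }
```

Run `print(bbr_status())` on the Artica server after clicking “Apply”; `bbr_active` should then be `True`. The `root` parameter exists only so the function can be exercised against a fake directory.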

## TCP Window-Size Scaling

### Sending More Data at a Time

The key to increasing TCP performance is to send more data at a time.

As the bandwidth of the network increases, more data can fit into the pipe (network), and as the pipe gets longer, it takes longer to acknowledge the receipt of the data.

This relationship is known as the bandwidth-delay product (BDP).

It is calculated as the bandwidth multiplied by the round-trip time (RTT), which gives the number of bits that must be in flight to fill the pipe. The formula is:

$$\text{BDP (bits)} = \text{bandwidth (bits/s)} \times \text{RTT (s)}$$

The computed BDP is the TCP window size needed to keep the link fully utilized.
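The BDP formula translates directly into code. This minimal Python sketch, with hypothetical function names, computes the BDP in bits and converts it to bytes, the unit in which TCP window sizes are expressed, for a 10 Gbps link with a 30 ms RTT.

```python
def bdp_bits(bandwidth_bps: float, rtt_s: float) -> float:
    """Bandwidth-delay product: bits that must be in flight to fill the pipe."""
    return bandwidth_bps * rtt_s


def bdp_bytes(bandwidth_bps: float, rtt_s: float) -> float:
    """The same BDP in bytes, i.e. the TCP window size needed to fill the link."""
    return bdp_bits(bandwidth_bps, rtt_s) / 8


# 10 Gbps link with a 30 ms round-trip time
bits = bdp_bits(10e9, 0.030)
print(f"BDP = {bits:,.0f} bits = {bdp_bytes(10e9, 0.030) / 1e6:.1f} MB")
```

This prints a BDP of 300,000,000 bits, or 37.5 MB of window.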

For example, imagine that you have a 10 Gbps network with an RTT of 30 milliseconds.

For the window size, use the original maximum TCP window size of 65,535 bytes, the largest value that fits in the 16-bit window field of the TCP header.

This value doesn't come close to taking advantage of the bandwidth capability.

The maximum TCP throughput possible on this link is then:

- (65535 bytes * 8 bits/byte) = bandwidth * 0.030 second

- bandwidth = (65535 bytes * 8 bits/byte) / 0.030 second

- bandwidth = 524280 bits / 0.030 second

- bandwidth = 17476000 bits / second

In other words, this window limits throughput to a little more than 17 Mbit/s, only about 0.17% of the network's 10 Gbps capacity.
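The ceiling above follows from a sender transmitting at most one full window per round trip. A short Python sketch (the function name is hypothetical) reproduces the arithmetic and compares the result with the 10 Gbps link:

```python
def max_throughput_bps(window_bytes: int, rtt_s: float) -> float:
    """Upper bound on single-flow TCP throughput: one full window per RTT."""
    return window_bytes * 8 / rtt_s


LINK_BPS = 10e9  # 10 Gbps link from the example
ceiling = max_throughput_bps(65535, 0.030)
print(f"{ceiling / 1e6:.2f} Mbit/s, {ceiling / LINK_BPS:.2%} of the link")
# prints: 17.48 Mbit/s, 0.17% of the link
```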

To remove the performance limit imposed by the original design of the TCP window size, extensions to the TCP protocol were introduced that allow the window size to be scaled to much larger values.

Window scaling supports windows up to 1,073,725,440 bytes, or almost 1 GiB.

This feature is defined in RFC 1323 (since obsoleted by RFC 7323) as the TCP Window Scale option.

The window scale extension expands the definition of the TCP window to 32 bits and then uses a scale factor to carry this 32-bit value in the 16-bit window field of the TCP header.
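The scale factor is a shift count: the value in the 16-bit window field is multiplied by 2 to the power of the shift, and the option allows a shift of at most 14, which is where the 1,073,725,440-byte maximum comes from (65,535 × 2^14). The Python sketch below, with hypothetical helper names, finds the smallest shift that can represent a desired window and the 16-bit value that would be advertised. On Linux the kernel chooses the shift itself during the handshake, based on its receive buffer limits, so this only illustrates the arithmetic.

```python
MAX_FIELD = 0xFFFF  # largest value the 16-bit TCP window field can hold
MAX_SHIFT = 14      # largest shift count the window scale option permits


def window_scale_shift(window_bytes: int) -> int:
    """Smallest shift count whose scaled 16-bit field can cover window_bytes."""
    shift = 0
    while (MAX_FIELD << shift) < window_bytes:
        shift += 1
        if shift > MAX_SHIFT:
            raise ValueError("window is larger than the maximum scaled window")
    return shift


def encode_window(window_bytes: int) -> tuple[int, int]:
    """Return (shift, field): the receiver advertises field, peers use field << shift."""
    shift = window_scale_shift(window_bytes)
    # Low-order bits are dropped, so the effective window is a multiple of 2**shift.
    return shift, window_bytes >> shift


print(MAX_FIELD << MAX_SHIFT)     # 1073725440, the maximum scaled window in bytes
print(encode_window(37_500_000))  # (10, 36621) for a 37.5 MB window
```

A shift of 10 means the window is advertised in units of 1,024 bytes.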

You can use the previous example to show the benefit of having window scaling.

As before, assume a 10 Gbps network with a 30 ms RTT, and compute the new window size with this formula:

$$\text{window size (bytes)} = \frac{\text{bandwidth (bits/s)} \times \text{RTT (s)}}{8\ \text{bits/byte}}$$

Plugging in the example numbers gives:

$$\text{window size} = \frac{10\,000\ \text{Mbit/s} \times 0.030\ \text{s}}{8\ \text{bits/byte}} = \frac{300\ \text{Mbit}}{8\ \text{bits/byte}} = 37.5\ \text{MB}$$

Increasing the TCP window size to 37.5 MB can raise the theoretical limit of TCP bulk-transfer performance to a value approaching the network's capacity.

Of course, many other factors can limit performance, including system overhead, average packet size, and the number of other flows sharing the link, but the larger window substantially mitigates the limit imposed by the original window size.

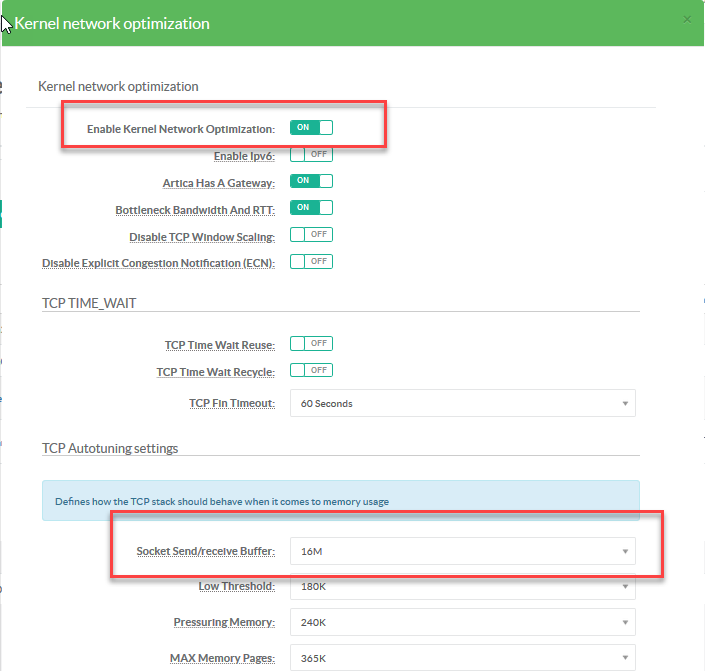

To change the TCP window size:

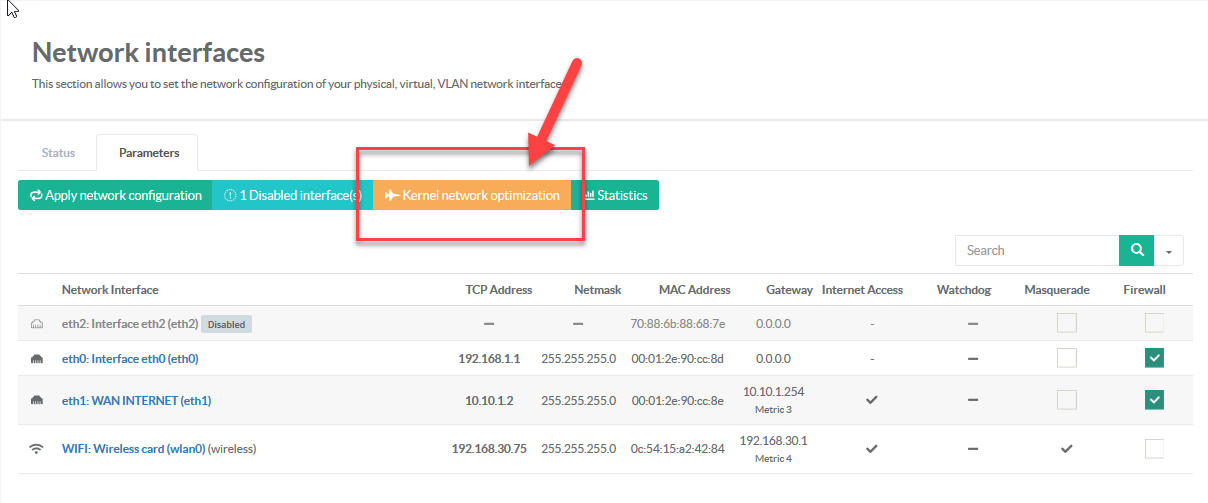

- In the network section, choose “Kernel Network optimization”.

- Turn on “Enable Kernel Network optimization”.

- Set the “Socket Send/receive buffer” values according to your network's capacity, using the BDP formula above.

- Click the “Apply” button.
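Artica does not state which kernel parameters the “Socket Send/receive buffer” fields map to, or in which unit. On Linux, the usual targets are `net.core.rmem_max` and `net.core.wmem_max` (ceilings for buffer sizes that applications request) and the third, maximum value of `net.ipv4.tcp_rmem` and `net.ipv4.tcp_wmem` (the ceiling for TCP buffer autotuning). Assuming those targets and byte units, this Python sketch turns link speed and RTT into candidate values. The function names are hypothetical, and the min/default values 4096, 87380 and 16384 are assumptions based on common kernel defaults; check yours with `sysctl net.ipv4.tcp_rmem` before reusing them.

```python
def buffer_bytes(bandwidth_mbps: int, rtt_ms: int) -> int:
    """BDP in bytes, rounded up, using integer math to avoid float rounding."""
    # Mbit/s * 1e6 * ms gives 1000 times the number of bits in flight.
    bits_x1000 = bandwidth_mbps * 1_000_000 * rtt_ms
    return -(-bits_x1000 // (1000 * 8))  # ceiling division: ms -> s, bits -> bytes


def sysctl_lines(bandwidth_mbps: int, rtt_ms: int,
                 tcp_min: int = 4096, rmem_default: int = 87380,
                 wmem_default: int = 16384) -> list[str]:
    """Candidate sysctl.conf lines that raise buffer ceilings to the BDP.

    Only the maximum values come from the BDP formula; tcp_min and the
    *_default values are assumed common Linux defaults.
    """
    size = buffer_bytes(bandwidth_mbps, rtt_ms)
    return [
        f"net.core.rmem_max = {size}",
        f"net.core.wmem_max = {size}",
        f"net.ipv4.tcp_rmem = {tcp_min} {rmem_default} {size}",
        f"net.ipv4.tcp_wmem = {tcp_min} {wmem_default} {size}",
    ]


# 10 Gbps (10,000 Mbit/s) link with a 30 ms RTT
for line in sysctl_lines(10_000, 30):
    print(line)
```

This sketch deliberately uses the bare BDP to match the formula in this article; whether to add headroom on top of it is a judgment call for your own traffic.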